Computational Visual

Attention Systems (CVAS) have gained a lot of interest in recent years.

Similar to the human visual system, CVAS detect regions of interest in images:

by “directing attention” to these regions, they restrict further processing to

sub-regions of the image. Such guiding mechanisms are urgently needed, since

the amount of information available in an image is so large that even the most

powerful computer cannot carry out an exhaustive search of the data.

Psychologists, neurobiologists, and computer scientists have investigated

visual attention thoroughly during the last decades and profited considerably

from each other. However, the interdisciplinary nature of the topic brings not only

benefits but also difficulties.

This seminar provides

an extensive survey of the grounding psychological and biological research on

visual attention as well as the current state of the art of computational

systems. It includes basic theories and models such as Feature Integration

Theory (FIT) and the Guided Search Model (GSM). A real-time computational visual

attention system, VOCUS (Visual Object detection with a Computational attention

System), is also included. Furthermore, it presents a broad range of applications

of computational attention systems in fields like computer vision, cognitive

systems, and mobile robotics.

Evolution has favored

the concept of selective attention because humans must deal with a

large amount of sensory input at each moment. This amount of data is, in

general, too large to be processed completely in detail, and the possible actions

at one and the same time are restricted; the brain has to prioritize. The same

problem is faced by many modern technical systems. Computer vision systems have

to deal with thousands, sometimes millions, of pixel values from each frame and

the computational complexity of many problems related to the interpretation of

image data is very high. The task becomes especially difficult if a system has

to operate in real time. Application areas in which real-time performance is

essential are cognitive systems and mobile robotics, since the systems have to

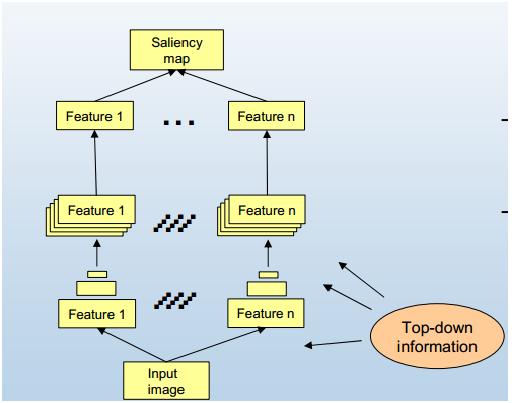

react to their environment instantaneously. Computational attention systems

compute different features like intensity, color, and orientations in parallel

to detect feature-dependent saliencies.
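
As a rough illustration of what a feature-dependent saliency can look like, the sketch below computes a saliency map for a single feature, intensity. It assumes that saliency is measured as center-surround contrast (the difference between a pixel and the mean of its neighborhood); the text above does not specify this, and the function names and window sizes are hypothetical. A full system would compute similar maps for color and orientation and combine them.

```python
def window_mean(img, cy, cx, radius):
    """Mean of the square window around (cy, cx), clipped at the image border."""
    h, w = len(img), len(img[0])
    values = [
        img[y][x]
        for y in range(max(0, cy - radius), min(h, cy + radius + 1))
        for x in range(max(0, cx - radius), min(w, cx + radius + 1))
    ]
    return sum(values) / len(values)


def intensity_saliency(img, center_radius=0, surround_radius=2):
    """Per-pixel absolute difference between center mean and surround mean.

    Assumption: center-surround contrast stands in for intensity saliency.
    """
    h, w = len(img), len(img[0])
    return [
        [
            abs(window_mean(img, y, x, center_radius)
                - window_mean(img, y, x, surround_radius))
            for x in range(w)
        ]
        for y in range(h)
    ]


def most_salient(saliency):
    """Return the (row, column) of the highest saliency value."""
    best = max((v, y, x) for y, row in enumerate(saliency) for x, v in enumerate(row))
    return best[1], best[2]


# A uniform gray image with one bright pixel: the bright pixel should pop out.
image = [[10] * 7 for _ in range(7)]
image[3][4] = 200
saliency_map = intensity_saliency(image)
focus = most_salient(saliency_map)
```

Here `focus` is the position an attention system would select first; uniform regions receive a saliency of zero because center and surround do not differ.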

Every stage director is aware of the

concepts of human selective attention and knows how to exploit them to

manipulate the audience: a sudden spotlight illuminating a person in the dark,

a motionless character suddenly starting to move, a voice from a character

hidden in the audience. These effects not only keep our interest alive, but

they also guide our gaze, telling us where the current action takes place. The

mechanism in the brain that determines which part of the multitude of sensory

data is currently of most interest is called selective attention. This concept

exists for each of our senses; for example, the cocktail party effect is well

known in the field of auditory attention. Although a room may be full of

different voices and sounds, it is possible to voluntarily concentrate on the

voice of a certain person. Before going into detail about Computational Visual

Attention Systems, we need a basic understanding of the human visual system.

Limitations

In the field of

computational systems and applications, there are still many open questions.

One important question is which features of attention are optimal and how

these features interact. Although intensively studied, this question is still

not fully answered. Most systems compute

only local saliencies. The investigation of visual perception in dynamic

scenes remains a challenging area. It is also not known how much

learning is involved in visual search, or what these mechanisms cost in money

and memory.

This seminar gives a

broad overview of computational visual attention systems and their cognitive foundations and aims to

bridge the gap between different research areas (psychological and biological

research). Visual attention is a highly interdisciplinary field, and the

disciplines investigate the area from different perspectives. Psychologists

usually investigate human behavior on specific tasks to understand the internal processes of the brain, often

resulting in psychophysical theories or models. Neurobiologists look

directly into the brain with new techniques such as functional Magnetic

Resonance Imaging (fMRI). These methods visualize which brain areas are active

under certain conditions. Computer scientists use the findings from psychology and biology to build improved

technical systems.

In this seminar we discussed the most

influential theories and models in the field of CVAS (FIT, GSM), as well as a method

for further improving the general performance of a computational visual

attention system by a typical factor of 10. The

method uses integral images for the feature computations and reduces the

number of necessary pixel accesses significantly, since it enables the

computation of arbitrarily sized feature values in constant time. In contrast

to other optimizations which approximate the feature values, this method is

accurate and provides the same results as the filter-based methods. The computation of regions of

interest can now be performed in real time for reasonable initial image

resolutions (half VGA), which makes attention systems usable in a variety of applications.

Attention can now be used for feature tracking or for reselecting landmark

features for visual SLAM. Computational attention has gained significantly in

popularity over the last decade. One reason is

that adequate computational resources are now available to study attentional

mechanisms with a high degree of fidelity.
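
The integral-image idea mentioned above can be sketched in a few lines. This is a generic summed-area table, not the implementation used in VOCUS; the function names are hypothetical. It shows why the sum (and therefore the mean) over a rectangle of any size needs only four table lookups, and why the result is exact rather than approximate.

```python
def integral_image(img):
    """Build a (h+1) x (w+1) summed-area table with a zero top row and left column."""
    h = len(img)
    w = len(img[0]) if h else 0
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # Sum of everything above this row plus the running sum of this row.
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box_sum(ii, top, left, bottom, right):
    """Sum over rows top..bottom-1 and columns left..right-1 in four lookups."""
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def box_mean(ii, top, left, bottom, right):
    """Mean over the same rectangle; the cost does not depend on its size."""
    area = (bottom - top) * (right - left)
    return box_sum(ii, top, left, bottom, right) / area


def brute_force_sum(img, top, left, bottom, right):
    """Reference result obtained by visiting every pixel in the rectangle."""
    return sum(img[y][x] for y in range(top, bottom) for x in range(left, right))


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
]
table = integral_image(image)
inner = box_sum(table, 1, 1, 3, 3)  # covers the pixels 6, 7, 10, 11
```

Building the table takes one pass over the image; after that, a center or surround mean of any window size costs the same four lookups, which is where the reduction in pixel accesses comes from.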
